Executive Summary

The AllCampus Digital Marketing team conducted a series of ad copy tests to evaluate the effectiveness of highlighting university rankings in search ad copy. Our findings indicate that ad copy featuring key program information and value propositions that appeal to a prospect’s needs usually generates more engagement than ad copy that references the school’s published rankings. The results have inspired revisions to our existing ad copy and influenced our approach to drafting search ads moving forward, both of which have translated into more inquiries for our partners’ programs.

Question

In Q3 of 2020, the AllCampus digital marketing team ran ad copy that promoted one partner’s top-5 U.S. News ranking within its discipline. We expected this copy to perform well, since the long-standing prestige of university rankings and the general awareness of them suggest that they matter to prospective students when selecting an online master’s program. However, we were surprised to see this copy significantly outperformed by an ad that mentioned the ability to study and work at the same time.

The two ads did not constitute a strict A/B test due to the presence of other variables, but they called into question our assumption that prospective students are attracted to university rankings in ad copy, especially given the particularly high ranking of this school. We wanted to perform a test that would provide clarity around the question, “How much do people searching for master’s programs really care about rankings?”

Parameters

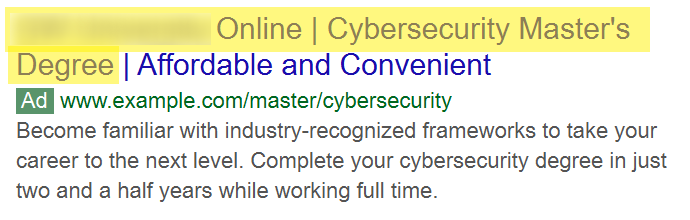

Using new copy, we created a series of formal A/B tests that pitted two Google search ads against each other. We used the expanded text ad (ETA) format, which shows 2-3 short headlines in a large font, along with 1-2 prose descriptions underneath in a smaller font. Below is an example of the format as used in an ETA for AllCampus.

In each test, we used a “ranking ad” that promoted university rankings in a headline and a “control ad” that promoted an alternative value proposition for the program but was otherwise identical. In most cases, the ads differed only in the single ranking/control headline, with one exception outlined in the results section.

To produce a result that could be broadly applied, we activated ads in a variety of programs from a range of academic disciplines, and we tested the ranking headlines against different alternative headlines in each program.

The tests ran at different intervals between September 30, 2020 and May 18, 2021. All ads were tested within non-brand search campaigns, meaning they were shown to people searching for the type of program being offered rather than for the university running the ad.

Key Performance Indicators

A number of key performance indicators (KPIs) were used to judge the success of these ads, each giving insight into a different component of performance.

Click-Through Rate (CTR)

This metric is calculated as Clicks / Impressions. It expresses the number of times the ad was clicked as a percentage of the number of times it was shown. CTR is often an indication of how appealing the ad is to its audience: the more appealing the ad, the more likely people are to click it.

Conversion Rate (CVR)

Also called click-to-inquiry rate, this metric is calculated as Inquiries / Clicks. It expresses the number of inquiries that were generated as a percentage of clicks on the ad. CVR indicates whether the right individuals within the campaign’s target audience clicked the ad. If an ad is appealing but not to the right people, engagement on the landing page will be low, and CVR will suffer.

Inquiries per 1,000 Impressions (IPM)

This metric is calculated as (Inquiries / Impressions) x 1000. Because Inquiries / Impressions equals (Clicks / Impressions) x (Inquiries / Clicks), that is, CTR x CVR, this metric is a composite of the two and captures their net impact, which is especially useful when CTR and CVR move in opposite directions. The underlying ratio is multiplied by 1,000 to make it easier to read and compare. While this study is confined to search advertising, IPM can be used for social and display channels as well.

Cost per Inquiry (CPI)

This metric is calculated as Cost / Inquiries and expresses the average investment needed to generate an inquiry. The lower the CPI, the more inquiries AllCampus is able to generate for a given level of investment.
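To make the four definitions concrete, here is a minimal sketch that computes them from one ad's raw totals. The `AdStats` container, the `kpis` function, and the numbers in the example are hypothetical illustrations, not data from this study; the sketch also checks that IPM equals CTR x CVR x 1000.

```python
from dataclasses import dataclass


@dataclass
class AdStats:
    """Raw totals for one ad over a test period (hypothetical container)."""
    cost: float
    impressions: int
    clicks: int
    inquiries: int


def kpis(ad: AdStats) -> dict[str, float]:
    """Compute CTR, CVR, IPM, and CPI as defined in this section."""
    ctr = ad.clicks / ad.impressions              # Clicks / Impressions
    cvr = ad.inquiries / ad.clicks                # Inquiries / Clicks
    ipm = ad.inquiries / ad.impressions * 1000    # (Inquiries / Impressions) x 1000
    cpi = ad.cost / ad.inquiries                  # Cost / Inquiries
    return {"CTR": ctr, "CVR": cvr, "IPM": ipm, "CPI": cpi}


# Hypothetical numbers for illustration only (not taken from the study).
control = AdStats(cost=2000.0, impressions=40_000, clicks=1_600, inquiries=80)
metrics = kpis(control)

# IPM is the composite of CTR and CVR: CTR x CVR x 1000 reproduces it.
assert abs(metrics["CTR"] * metrics["CVR"] * 1000 - metrics["IPM"]) < 1e-9
print(metrics)
```

In this made-up example, a 4% CTR and a 5% CVR combine into 2 inquiries per 1,000 impressions, which is why IPM can rise even when one of its two components falls.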

Results

Test 1

Ranking Ad Lost Decisively

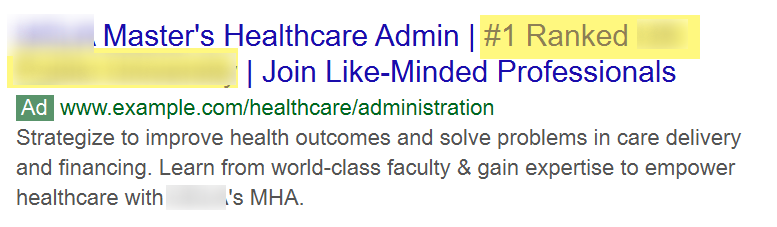

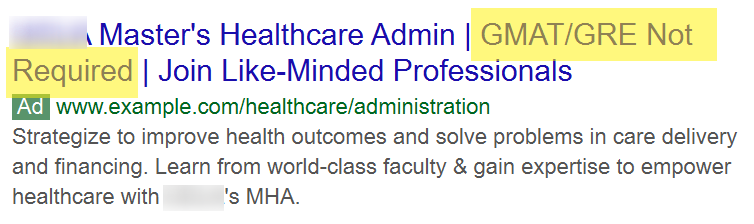

A test for one partner’s online master of health administration program ran from April 14 to May 18, 2021. We tested two ads that were identical except for headline 2, where one ad included the university’s #1 ranking in a particular category and the other read “GMAT/GRE Not Required”.

The table below shows that the ranking ad performed worse in every KPI. Most notably, it had 39% fewer inquiries per 1,000 impressions (IPM) and a 29% higher cost per inquiry (CPI) than the control ad.

We used a statistical significance calculator to estimate the likelihood that the same ad would win if the test were repeated; the calculator takes into account the size of the sample and the difference in performance between the two ads. In Test 1, there was 99% certainty that the ranking ad would have a lower IPM than the control ad if the test were to be repeated. In other words, moving forward we would expect to be able to generate more inquiries with the control ad, which promoted the accessibility of the application process.
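The study does not name the significance calculator it used, and such calculators differ in method (some are Bayesian). As a hedged illustration of the general idea, the sketch below assumes one common approach: a one-sided, pooled two-proportion z-test on inquiries per impression (the rate behind IPM), reporting the normal CDF of the z-score as the "certainty" that the ranking ad's true rate is lower. The function name and all counts are hypothetical.

```python
import math


def confidence_lower_rate(inq_a: int, impr_a: int, inq_b: int, impr_b: int) -> float:
    """One-sided confidence that ad A's true inquiries-per-impression rate is below ad B's.

    Sketch of a pooled two-proportion z-test; not necessarily the study's calculator.
    """
    p_a = inq_a / impr_a
    p_b = inq_b / impr_b
    pooled = (inq_a + inq_b) / (impr_a + impr_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / impr_a + 1 / impr_b))
    if se == 0:
        return 0.5  # no inquiries at all: the test cannot separate the ads
    z = (p_b - p_a) / se
    # Standard normal CDF expressed through the error function.
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))


# Hypothetical counts: ranking ad (A) vs. control ad (B), equal impressions.
certainty = confidence_lower_rate(30, 20_000, 50, 20_000)
print(f"{certainty:.1%}")
```

With these made-up counts the result is just under 99%. Halving every count while keeping the same rates lowers the certainty, which mirrors the point made for Test 3: low inquiry volume limits how confident a repeat-result estimate can be.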

|  | Cost | Impressions | Clicks | Inquiries | CTR | CVR | IPM | CPI |
|---|---|---|---|---|---|---|---|---|
| Ranking Ad vs. Control | -45% | -29% | -44% | -57% | -21% | -23% | -39% | +29% |
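Each percentage in these tables is the ranking ad's relative difference from the control. Because CTR, CVR, IPM, and CPI are ratios of the raw columns, their differences follow from the count differences: if a numerator changes by a and its denominator by b, the ratio changes by (1 + a) / (1 + b) - 1. The sketch below applies that identity to Test 1's count columns; `rate_change` is a hypothetical helper name.

```python
def rate_change(numerator_change: float, denominator_change: float) -> float:
    """Relative change of a ratio, given the relative changes of its parts."""
    return (1 + numerator_change) / (1 + denominator_change) - 1


# Test 1 count differences (ranking ad vs. control), from the table above.
cost, impressions, clicks, inquiries = -0.45, -0.29, -0.44, -0.57

derived = {
    "CTR": rate_change(clicks, impressions),   # Clicks / Impressions
    "CVR": rate_change(inquiries, clicks),     # Inquiries / Clicks
    "IPM": rate_change(inquiries, impressions),  # Inquiries / Impressions
    "CPI": rate_change(cost, inquiries),       # Cost / Inquiries
}
for name, change in derived.items():
    print(f"{name}: {change:+.0%}")
```

This prints -21%, -23%, -39%, and +28%. The CPI value differs from the table's +29% by one point because the published count differences are themselves rounded.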

Test 2

Ranking Ad Lost Decisively

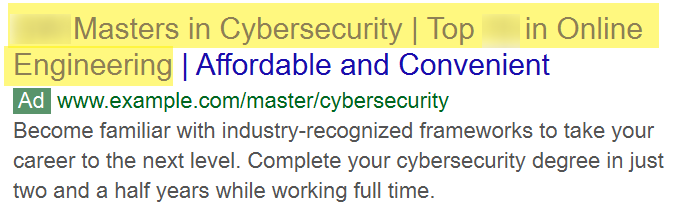

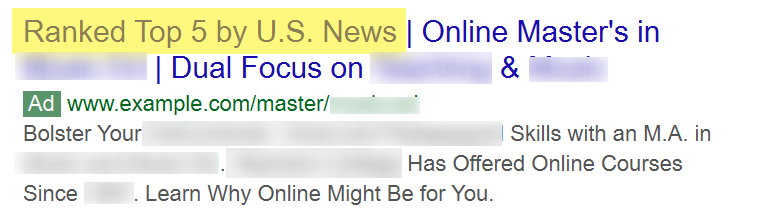

A test for another partner’s online cybersecurity master’s degree programs ran from November 17, 2020 to February 17, 2021. The ranking ad placed the brand and degree name in headline 1 with a ranking in headline 2, whereas the control ad spread the brand and degree names across headlines 1 and 2. Headline 3 and both descriptions were identical.

In essence, the ranking ad was denser and contained more information, whereas the control ad gave greater prominence to the essential information, allowing more real estate for the brand and degree names. We expected that adding information about the university’s strong ranking would be more appealing than not mentioning another value proposition at all; however, the results proved otherwise. The ranking ad fell far short of the control in all key performance indicators with an 82% lower IPM and 169% higher CPI. The margin by which the control ad outperformed the ranking ad once again provided 99% statistical certainty that the ranking ad would have the lower IPM if the test were repeated.

|  | Cost | Impressions | Clicks | Inquiries | CTR | CVR | IPM | CPI |
|---|---|---|---|---|---|---|---|---|
| Ranking Ad vs. Control | -55% | -8% | -69% | -83% | -67% | -46% | -82% | +169% |

Test 3

Ranking Ad Lost, But Statistical Significance Is Limited

Our initial test for this same program, the one mentioned in the Question section above that spawned this case study, found that the ranking ad had a lower IPM than the control ad, with 95% statistical certainty of a repeated result. However, a variety of assets differed in that test aside from the ranking headline, so we ran a more controlled test to isolate the variable.

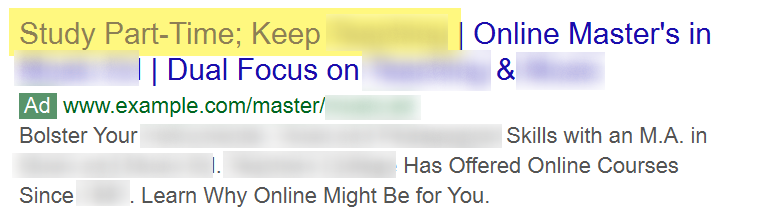

A controlled A/B test used the headline 1 slot to test the school’s top-5 U.S. News ranking in its discipline against a headline asserting that students could complete the degree while working full time. It ran from September 30 to November 4, 2020.

Results of the test favored the control ad. In contrast to the other tests, the ranking ad was the clear winner in terms of CTR, but it again lost substantially in CVR. This caused IPM to be 19% lower and CPI to be 58% higher than for the control ad.

Recall that CTR is viewed as a measure of the ad’s appeal to prospects on the search engine results page, while CVR indicates the willingness of the “clickers” to complete an inquiry form on the landing page. In the context of an ad test, a lower CVR indicates either that more of the wrong individuals are clicking the ad or that the ad sets expectations that leave clickers less inclined to convert when they arrive at the landing page. One of these two scenarios appeared to be occurring in this test.

Because the number of inquiries gathered during the test was low and the performance gap was relatively narrow, there was only 66% statistical certainty that the ranking ad would continue to lose in repeated tests. However, the case for the control ad is strengthened by the less formal test conducted for the school previously, which found 95% certainty that the ranking ad would lose.

|  | Cost | Impressions | Clicks | Inquiries | CTR | CVR | IPM | CPI |
|---|---|---|---|---|---|---|---|---|
| Ranking Ad vs. Control | +148% | +93% | +188% | +57% | +49% | -46% | -19% | +58% |

Test 4

Ranking Ad Lost Decisively

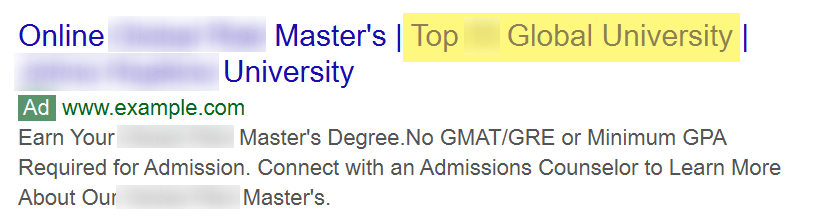

An additional experiment that ran from October 1, 2020 until March 1, 2021 tested ranking and control ads in the second headline slot. In this test, the control ad included “Advance Your Career” rather than a program ranking.

Click-through rate (CTR) was nearly identical, but the much lower landing page conversion rate (CVR) for the ranking ad caused it to have a 67% lower IPM and 175% higher CPI. While the inquiry volume was low for this test, the stark difference in IPM led to 99% statistical certainty in a repeated result. As with the formal experiment conducted in Test 3, the results of this test suggested that lower-quality clicks were being driven to the landing page.

|  | Cost | Impressions | Clicks | Inquiries | CTR | CVR | IPM | CPI |
|---|---|---|---|---|---|---|---|---|
| Ranking Ad vs. Control | +63% | +79% | +78% | -41% | +0% | -66% | -67% | +175% |

Aggregate Results

Ranking Ads Lost Decisively

Overall, out of the four tests we ran, the control ad won decisively in three and won in the fourth with lower statistical significance.

Although aggregating all the data weights the metrics toward the individual tests with higher volumes of impressions, clicks, and inquiries, this view is useful for drawing general conclusions from the study as a whole, especially because total impressions for each test fell within a relatively narrow range. The aggregate numbers show the ranking ad underperforming the control ad in every KPI.
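The weighting effect described above can be seen in a small sketch. Pooling raw totals before computing IPM is the same as taking an impression-weighted average of the per-test IPMs, so a high-volume test pulls the aggregate toward its own result. The two tests and their counts below are hypothetical, not the study's data.

```python
# Hypothetical per-test totals for one ad: (impressions, inquiries).
tests = {
    "Test A": (50_000, 100),  # IPM 2.0
    "Test B": (10_000, 40),   # IPM 4.0
}

total_impr = sum(impr for impr, _ in tests.values())
total_inq = sum(inq for _, inq in tests.values())

# Aggregate IPM computed from pooled totals.
pooled_ipm = total_inq / total_impr * 1000

# Same value as an impression-weighted mean of per-test IPMs.
weighted_ipm = sum((impr / total_impr) * (inq / impr * 1000) for impr, inq in tests.values())

# A simple unweighted mean treats both tests equally instead.
unweighted_ipm = sum(inq / impr * 1000 for impr, inq in tests.values()) / len(tests)

print(f"pooled {pooled_ipm:.2f}, weighted {weighted_ipm:.2f}, unweighted {unweighted_ipm:.2f}")
```

Here the pooled figure (about 2.33) sits much closer to the larger Test A than the unweighted mean (3.00) does. When test volumes are similar, as the study notes they were, the two views converge.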

|  | Cost | Impressions | Clicks | Inquiries | CTR | CVR | IPM | CPI |
|---|---|---|---|---|---|---|---|---|
| Ranking Ad vs. Control | -27% | +6% | -21% | -53% | -25% | -41% | -56% | +57% |

Analysis

The primary conclusion that can be drawn from this study is that search ad copy that touts school or university rankings underperforms. This conclusion is bolstered by the fact that a wide variety of wording was used in the ranking headlines tested and a range of alternative value propositions were tested against the ranking headlines.

| Losing Headlines | Winning Headlines |
|---|---|

On our digital marketing team, these results have spurred a move away from advertising rankings in search ad copy. From viewing the winning headlines, one possible conclusion is that search ad copy that is either informational or prospect/benefit-centric tends to perform better than ranking headlines, which are more university-centric. It is these types of prospect-centric headlines that we are favoring as we move forward with further tests.

An interesting element of this study is that while the control ads had more inquiries per 1,000 impressions in all four tests and higher click-through and conversion rates in the aggregate, the primary KPI driving the higher IPM was CVR rather than CTR. In fact, the control ad's CTR was decisively higher in only two of the four tests: in Test 4 it was practically equal, and in Test 3 the ranking ad actually had a higher CTR. The mixed results for CTR suggest that ranking headlines may sometimes pique a prospect’s curiosity about a program, but given their low CVR, they either drive less serious prospects to the landing page or fail to build enough interest in the program for prospects to submit an inquiry after viewing the landing page.
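One way to check which KPI drove each IPM gap is to take logarithms. Since IPM = CTR x CVR x 1000, the log of the ranking-to-control IPM ratio is the sum of the log CTR ratio and the log CVR ratio, so the larger term marks the bigger driver. The sketch below applies this to the CTR and CVR differences reported in the tables above. The decomposition is our illustration rather than a method used in the study, and `decompose` is a hypothetical name.

```python
import math

# CTR and CVR differences (ranking ad vs. control), from the result tables above.
changes = {
    "Test 1": (-0.21, -0.23),
    "Test 2": (-0.67, -0.46),
    "Test 3": (+0.49, -0.46),
    "Test 4": (+0.00, -0.66),
    "Aggregate": (-0.25, -0.41),
}


def decompose(ctr_change: float, cvr_change: float) -> tuple[float, float, float]:
    """Split the log IPM ratio into CTR and CVR terms; also return the implied IPM change."""
    log_ctr = math.log(1 + ctr_change)
    log_cvr = math.log(1 + cvr_change)
    implied_ipm_change = math.exp(log_ctr + log_cvr) - 1  # (1+ctr)(1+cvr) - 1
    return log_ctr, log_cvr, implied_ipm_change


for test, (ctr, cvr) in changes.items():
    log_ctr, log_cvr, ipm = decompose(ctr, cvr)
    print(f"{test}: log CTR {log_ctr:+.2f}, log CVR {log_cvr:+.2f}, implied IPM {ipm:+.0%}")
```

By this measure, the CVR term is the larger one in Tests 1, 3, and 4 and in the aggregate, while Test 2's drop owes more to CTR. The implied IPM changes match the tables to within a point, since the inputs are rounded.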

At AllCampus, we appreciate the willingness of our partners to follow the data, even if it means a top-ranked university forgoing an opportunity to speak to a potential point of institutional pride. Perhaps this should come as no surprise since our partners tend to be academics who, like us, are inspired and motivated by data and reason. The more efficient we can be in our ad spend, the better the economics are for the university and, in the end, students.

As helpful as these results are to our marketing efforts, we caution against concluding that university rankings are entirely moot in recruitment efforts for master’s programs. They may serve a role in raising awareness about a program via external ranking lists, and they often factor into prospects’ ultimate decisions about which schools to consider. Strong programmatic rankings may also assist universities that are not nationally ranked as a whole in conveying prestige to prospects who may not be aware of the university’s particular strength in a specific field. Since our test focused on school-wide rankings, further testing is required to determine the efficacy of rankings for specific programs or for university/program features such as overall value.

Another use case for rankings is to validate broader marketing claims made elsewhere. For instance, if a compelling headline on a landing page communicates a university’s creativity and innovation, it may not be necessary to reference the school’s ranking in the same headline. The ranking could, however, serve a support role in a lower-profile section dedicated exclusively to rankings and accolades. In other words, it’s likely that rankings are still important evidence points of an institution’s prestige, but they may be better used as supplemental proof rather than as bold claims in search advertisements shown to prospects who are often only forming their first impressions of a program.
